- July 10, 2025

- by Anoop Jain

The Model Context Protocol (MCP): The Missing Link in Scalable AI Tool Integration

Why MCP Is a Game-Changer for AI in 2025

AI is no longer just about smarter models—it’s about smarter integrations. In 2025, enterprise adoption of large language models (LLMs) like GPT-4o and Claude 3 is exploding, but integration remains the biggest barrier.

Enter the Model Context Protocol (MCP), an open standard introduced in late 2024 that changes how LLM applications interact with your tools, APIs, and internal systems.

🧠 Think of MCP as the HTTP of tool-augmented AI.

What Is MCP?

The Model Context Protocol (MCP) is a standardized way for tools to expose their capabilities to AI models using:

- A JSON Schema that describes each tool's inputs (and, optionally, its outputs)

- A model-agnostic interface: JSON-RPC 2.0 messages for listing and calling tools, carried over stdio or HTTP

Developed by Anthropic (makers of Claude), MCP enables tools to work across multiple AI models without rewriting code or building custom plugins for each model.
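To make the schema idea concrete, here is a minimal sketch of what a tool description can look like. The field names (name, description, inputSchema) follow the MCP tool definition; the get_weather tool and the validate_arguments helper are hypothetical and exist only for illustration. The helper checks a tiny subset of JSON Schema, so a real deployment would rely on a full validator instead.

```python
# Hypothetical tool descriptor. The keys follow the MCP tool definition;
# the tool itself is invented for this example.
GET_WEATHER_TOOL = {
    "name": "get_weather",
    "description": "Return the current temperature for a city.",
    "inputSchema": {
        "type": "object",
        "properties": {
            "city": {"type": "string"},
            "units": {"type": "string", "enum": ["celsius", "fahrenheit"]},
        },
        "required": ["city"],
    },
}

# Only the JSON types this sketch needs; not a full JSON Schema mapping.
_JSON_TYPES = {"string": str, "boolean": bool, "object": dict}


def validate_arguments(tool: dict, arguments: dict) -> list[str]:
    """Return a list of problems found in `arguments` (empty list = valid)."""
    schema = tool["inputSchema"]
    problems = []
    # Every required field must be present.
    for field in schema.get("required", []):
        if field not in arguments:
            problems.append(f"missing required field: {field}")
    # Every supplied field must be declared, typed correctly, and in its enum.
    for field, value in arguments.items():
        spec = schema["properties"].get(field)
        if spec is None:
            problems.append(f"unknown field: {field}")
            continue
        expected = _JSON_TYPES.get(spec.get("type"))
        if expected and not isinstance(value, expected):
            problems.append(f"{field}: expected {spec['type']}")
        if "enum" in spec and value not in spec["enum"]:
            problems.append(f"{field}: must be one of {spec['enum']}")
    return problems
```

Because the description is plain data, any model-side client can read it and decide how to call the tool without knowing how the tool is implemented.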

Why Is MCP Needed?

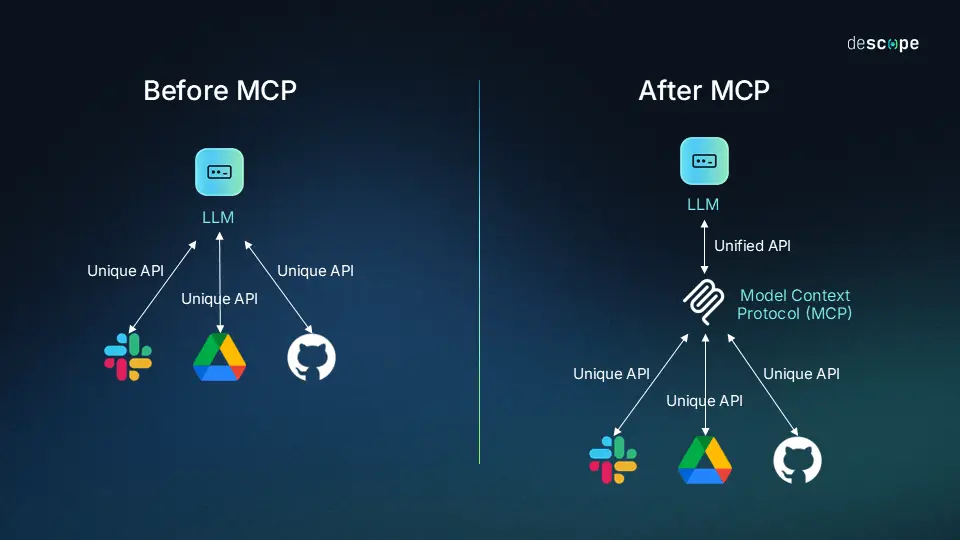

Before MCP, integrating tools with AI models looked like this:

- 🔁 One plugin per model × per tool = M × N integrations

- 🚧 High engineering effort

- ⚠️ Inconsistent context passing

- 🧩 No interoperability

Now, with MCP:

- 🛠️ One tool = One MCP-compliant endpoint

- ⚙️ M tools + N models = M + N integrations

- 🔄 Easier switching between GPT, Claude, Mistral, LLaMA, and more, as long as the host application speaks MCP

This means faster AI integrations, reusable tool logic, and scalable deployments.
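The M × N versus M + N comparison above is simple arithmetic, and a few lines make the gap visible. The function names here are made up for the example, and the figures (4 models, 10 tools) are illustrative only.

```python
def integrations_without_mcp(models: int, tools: int) -> int:
    """One custom plugin for every (model, tool) pair."""
    return models * tools


def integrations_with_mcp(models: int, tools: int) -> int:
    """One MCP client per model plus one MCP endpoint per tool."""
    return models + tools


if __name__ == "__main__":
    models, tools = 4, 10  # illustrative numbers, not measurements
    print("without MCP:", integrations_without_mcp(models, tools))
    print("with MCP:   ", integrations_with_mcp(models, tools))
```

With 4 models and 10 tools, that is 40 pairwise integrations against 14, and the difference widens as either number grows.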

Real-World Benefits

✅ Reduced Development Time

A benchmark from a major enterprise AI team reportedly showed, per tool integration:

- 🕐 Old integration time: 18–22 hours

- ⚡ With MCP: Under 2 hours (with tests)

🔁 One-Time Setup

Expose your APIs as JSON-RPC messages over HTTP POST (or over stdio for local tools). No vendor-specific SDKs or proprietary plugins are required.
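As a rough sketch of what sits behind such a POST endpoint, the function below takes a JSON request body and returns a JSON response body. It assumes the JSON-RPC 2.0 message shapes that MCP uses for tools/list and tools/call; the add tool, the TOOLS registry, and the handle_request name are hypothetical. It deliberately leaves out the HTTP server, the initialization handshake, and authentication, all of which a real endpoint needs.

```python
import json

# Hypothetical registry: tool name -> description, input schema, and handler.
TOOLS = {
    "add": {
        "description": "Add two numbers.",
        "inputSchema": {
            "type": "object",
            "properties": {"a": {"type": "number"}, "b": {"type": "number"}},
            "required": ["a", "b"],
        },
        "handler": lambda args: args["a"] + args["b"],
    }
}


def _error(request_id, code: int, message: str) -> str:
    """Build a JSON-RPC 2.0 error response."""
    return json.dumps(
        {"jsonrpc": "2.0", "id": request_id, "error": {"code": code, "message": message}}
    )


def handle_request(raw_body: str) -> str:
    """Dispatch one JSON-RPC request body and return the response body."""
    request = json.loads(raw_body)
    request_id = request.get("id")
    method = request.get("method")
    params = request.get("params", {})

    if method == "tools/list":
        # Advertise every tool with its schema, without exposing the handler.
        result = {
            "tools": [
                {"name": name, "description": t["description"], "inputSchema": t["inputSchema"]}
                for name, t in TOOLS.items()
            ]
        }
    elif method == "tools/call":
        tool = TOOLS.get(params.get("name"))
        if tool is None:
            return _error(request_id, -32602, "Unknown tool")
        output = tool["handler"](params.get("arguments", {}))
        # Tool output is returned as a list of content parts.
        result = {"content": [{"type": "text", "text": str(output)}]}
    else:
        return _error(request_id, -32601, "Method not found")

    return json.dumps({"jsonrpc": "2.0", "id": request_id, "result": result})
```

Wiring handle_request into any web framework's POST route is then a few lines, and the tool logic stays independent of which model ends up calling it.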

🌐 Cross-Model Compatibility

Write once, integrate with:

- OpenAI GPT-4o

- Anthropic Claude 3

- Meta LLaMA models

- Mistral, Groq, open-source agents
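One way to picture "write once" is a thin adapter that reshapes a single tool description into whatever each vendor's API expects. The sketch below assumes the tool formats used by OpenAI's Chat Completions function calling and Anthropic's Messages API as commonly documented; check the current vendor docs before relying on them. The LEAVE_REQUEST_TOOL descriptor and both function names are hypothetical.

```python
# Hypothetical MCP-style tool descriptor, written once.
LEAVE_REQUEST_TOOL = {
    "name": "submit_leave_request",
    "description": "Submit a leave request for an employee.",
    "inputSchema": {
        "type": "object",
        "properties": {
            "employee_id": {"type": "string"},
            "start_date": {"type": "string"},
            "end_date": {"type": "string"},
        },
        "required": ["employee_id", "start_date", "end_date"],
    },
}


def to_openai_tool(tool: dict) -> dict:
    """Reshape the descriptor for an OpenAI-style `tools` list (assumed format)."""
    return {
        "type": "function",
        "function": {
            "name": tool["name"],
            "description": tool["description"],
            "parameters": tool["inputSchema"],
        },
    }


def to_anthropic_tool(tool: dict) -> dict:
    """Reshape the descriptor for an Anthropic-style `tools` list (assumed format)."""
    return {
        "name": tool["name"],
        "description": tool["description"],
        "input_schema": tool["inputSchema"],
    }
```

The JSON Schema itself passes through untouched in both cases; only the envelope around it changes, which is why the tool logic does not need to be rewritten per model.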

Use Cases: How MCP Unlocks Value

| Industry | Use Case Example |
| --- | --- |
| Healthcare | LLMs calling EHR or lab APIs for real-time decision support |
| Fintech | AI analyzing transactions via secure bank APIs |
| HR Tech | Chatbots processing leave requests in HR systems |
| DevOps | Claude triggering alerts via system monitoring endpoints |
| E-commerce | GPT auto-updating product descriptions from inventory tools |

🛠️ MCP makes your enterprise tools AI-augmented without full rewrites.

Developer Workflow: What Changes?

Before MCP:

- 1 model → 1 custom plugin per tool

- Tool logic duplicated across vendors

- Rewrites needed after each model update

After MCP:

- 1 JSON schema + 1 POST endpoint per tool

- Plug-and-play across all LLMs

- Update once, support all
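From the calling side, the "after" workflow can be sketched as one small client helper that any model integration reuses. It assumes the JSON-RPC 2.0 shape MCP uses for tools/call requests and for text content in results; build_tool_call, extract_text, and the example tool name are hypothetical, and the transport (HTTP or stdio) is left out.

```python
import itertools

# Monotonic request ids so responses can be matched to requests.
_request_ids = itertools.count(1)


def build_tool_call(name: str, arguments: dict) -> dict:
    """Build a tools/call request; identical no matter which LLM asked for it."""
    return {
        "jsonrpc": "2.0",
        "id": next(_request_ids),
        "method": "tools/call",
        "params": {"name": name, "arguments": arguments},
    }


def extract_text(response: dict) -> str:
    """Join the text parts of a tool result, or raise if the call failed."""
    if "error" in response:
        raise RuntimeError(response["error"]["message"])
    parts = response["result"]["content"]
    return "".join(part["text"] for part in parts if part["type"] == "text")
```

When the tool's schema changes, only the server-side description is updated; this client code stays the same for every model that uses it.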

Strategic Insight: The Next AI Advantage Is Interoperability

AI models are becoming commoditized. Your competitive edge lies in:

- ⏱️ Faster AI deployment

- 🔌 Better tool integration

- ⚒️ Lower engineering costs

- 📈 Scalable product growth

MCP isn’t just an integration trick—it’s a business enabler for the AI-native economy.

Final Takeaway: Don’t Let Tooling Hold Back Your AI Potential

Whether you’re building a co-pilot, a multi-agent orchestration platform, or AI-powered SaaS, MCP is the new standard to watch.

It makes your tools:

- 📡 Discoverable

- ⚙️ Plug-and-play

- 🔁 Reusable across models

- 🔒 Securable for enterprise use, since authentication and access control stay with your endpoint

Next Steps

- ✅ Make your APIs MCP-ready

- 🧱 Use standard JSON schemas

- 🚀 Join open-source communities building MCP agents

The AI future isn’t single-model. It’s multi-model, multi-tool—and MCP makes that future possible.

About the Author

Anoop Jain, CEO at gNxt Systems

With 25+ years of expertise, Mr. Anoop Jain delivers complex projects, driving innovation through IT strategies and inspiring teams to achieve milestones in a competitive, technology-driven landscape.